If you plan to link your Google Analytics (GA4) property with BigQuery so you can export the raw event data it collects to a Google BigQuery project, this guide is for you.

It won’t walk you through linking the two tools itself. Instead, it outlines nine (9) important steps to take before you connect them.

These steps will help you avoid technical and business problems during the integration.

If you have already linked the tools, you can still follow this guide to ensure you’re not overlooking any of the recommendations outlined here.

Here are the nine (9) actions I recommend you check off before linking the two platforms:

- Ensure you have the correct access

- Set up billing details

- Establish a BigQuery budget alert

- Deregister high cardinality dimensions in Google Analytics

- Check for duplicate events

- Document your GA4 event implementation

- Keep documentation for linking GA4 and BigQuery

- Privacy, privacy, privacy

- Address policy conflicts

1. Ensure You Have the Correct Access

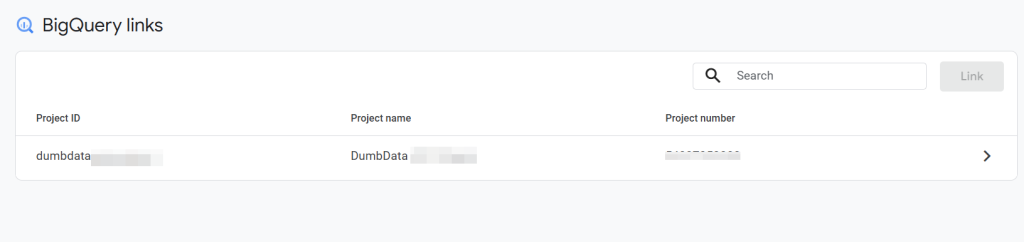

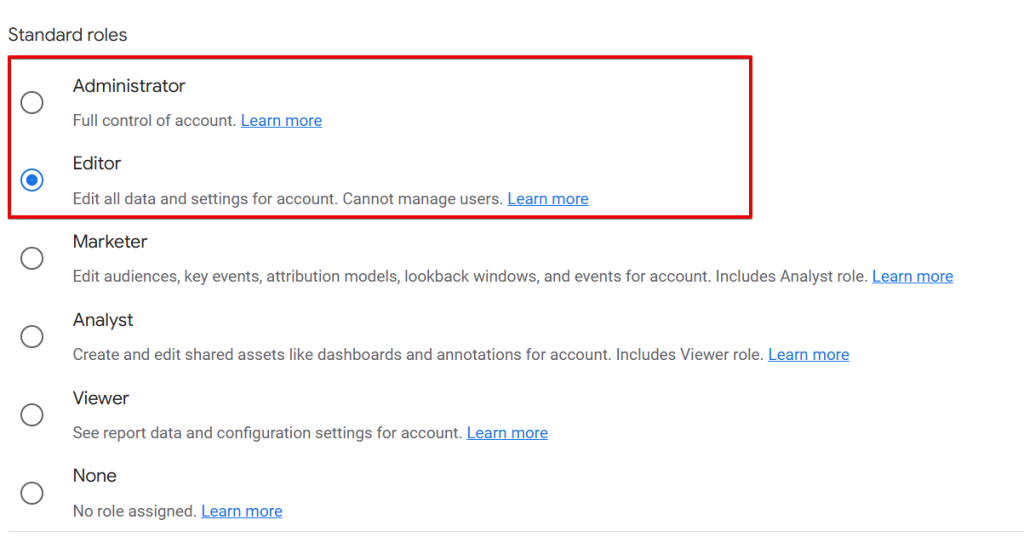

It’s essential to confirm that you have the correct access permissions in both Google Analytics and Google Cloud, specifically for the BigQuery project you intend to use for data export.

For Google Analytics, you need the Editor role (or higher) on the property to link it to the BigQuery project.

On the Google Cloud side, you must use an email address with Owner-level access to the BigQuery project.
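As a rough pre-flight check, the two access requirements can be written down as a small function. This is a hypothetical sketch: it assumes you already know the account’s GA4 property role and its Google Cloud IAM roles (for example, from the admin screens), and the role ordering is an assumption based on GA4’s standard roles.

```python
# Assumed ordering of GA4's standard property roles, lowest to highest
GA4_ROLE_RANK = {
    "Viewer": 1,
    "Analyst": 2,
    "Marketer": 3,
    "Editor": 4,
    "Administrator": 5,
}

def can_link(ga4_role: str, gcp_roles: set[str]) -> bool:
    """True if the account has Editor (or higher) in GA4 and Owner on the Cloud project."""
    has_ga4_editor = GA4_ROLE_RANK.get(ga4_role, 0) >= GA4_ROLE_RANK["Editor"]
    has_gcp_owner = "roles/owner" in gcp_roles  # Google Cloud's basic Owner role
    return has_ga4_editor and has_gcp_owner

print(can_link("Editor", {"roles/owner"}))          # True
print(can_link("Analyst", {"roles/owner"}))         # False
print(can_link("Administrator", {"roles/editor"}))  # False
```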

You can proceed to the next steps once you have verified your access rights.

2. Set Up Billing Details

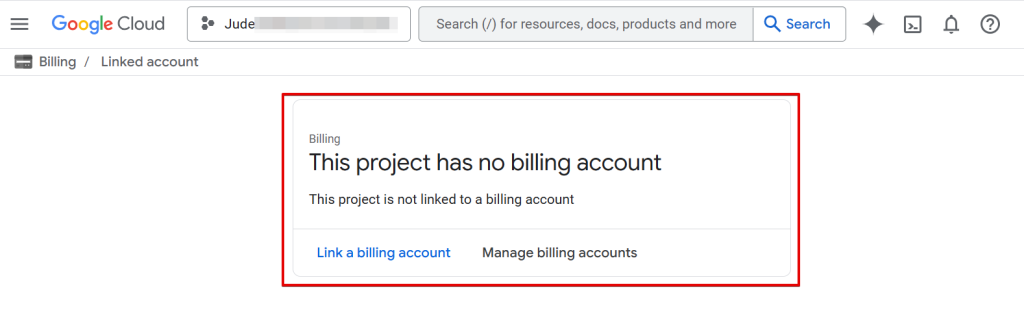

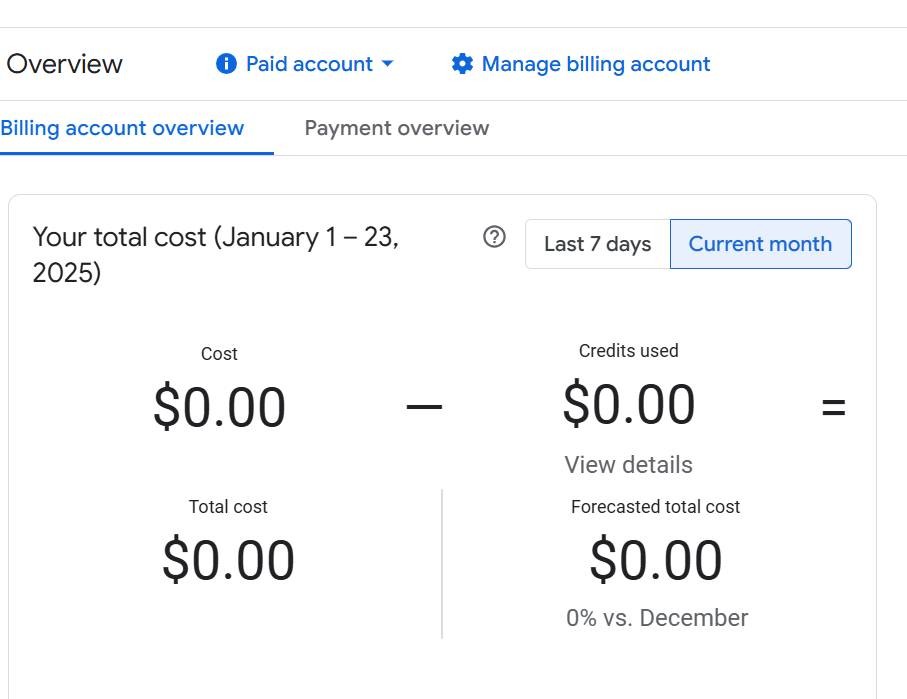

An important step is to add billing details to your Google Cloud project, which moves BigQuery out of the free sandbox and makes the project billing-enabled.

With billing enabled, your raw GA4 event data is no longer exported to the BigQuery sandbox environment, where tables expire 60 days after they are created by default.

(Note: Tables created while you were in the sandbox keep their expiration date even after you add billing details, unless you manually update the dataset and table expiration settings.)
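A quick date calculation shows how short that window is. The sketch below assumes the sandbox default of 60 days and a hypothetical daily table created on 1 March 2024.

```python
from datetime import date, timedelta

# Assumption: the sandbox default, tables expire 60 days after creation
SANDBOX_TABLE_LIFETIME = timedelta(days=60)

def sandbox_expiry(created: date) -> date:
    """Date on which a table created in the sandbox would expire by default."""
    return created + SANDBOX_TABLE_LIFETIME

def days_left(created: date, today: date) -> int:
    """Days remaining before the table expires (negative once it has expired)."""
    return (sandbox_expiry(created) - today).days

print(sandbox_expiry(date(2024, 3, 1)))                # 2024-04-30
print(days_left(date(2024, 3, 1), date(2024, 4, 20)))  # 10
```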

When entering billing details, make sure you provide a valid payment method. If the Google Analytics export to your BigQuery project is interrupted by an invalid payment method, the data for the period during which payment validation failed cannot be re-exported.

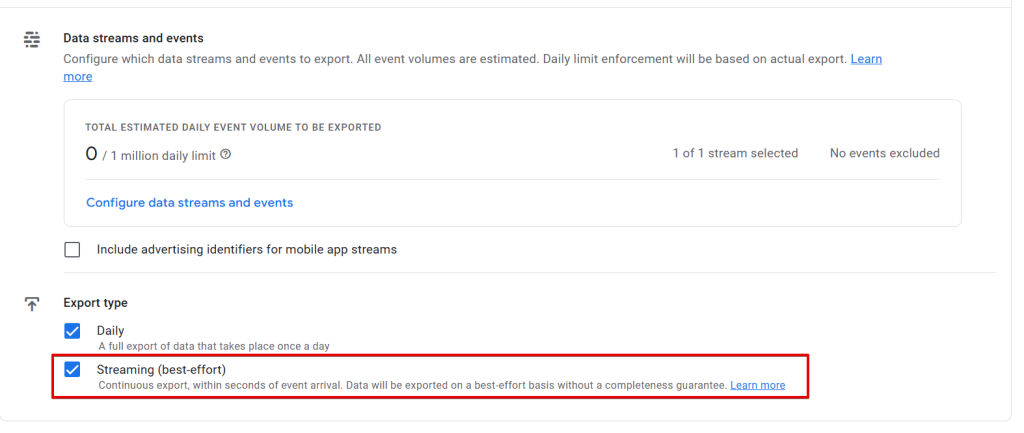

A billing-enabled project is also required to turn on the streaming option in your Google Analytics data export configuration.

The BigQuery streaming export makes current-day data available within minutes, rather than having to wait until the end of the day to access raw event data.

With streaming export enabled, BigQuery holds more up-to-date data for analysing user behaviour and traffic, and for building near-real-time reports in Looker Studio or similar tools.

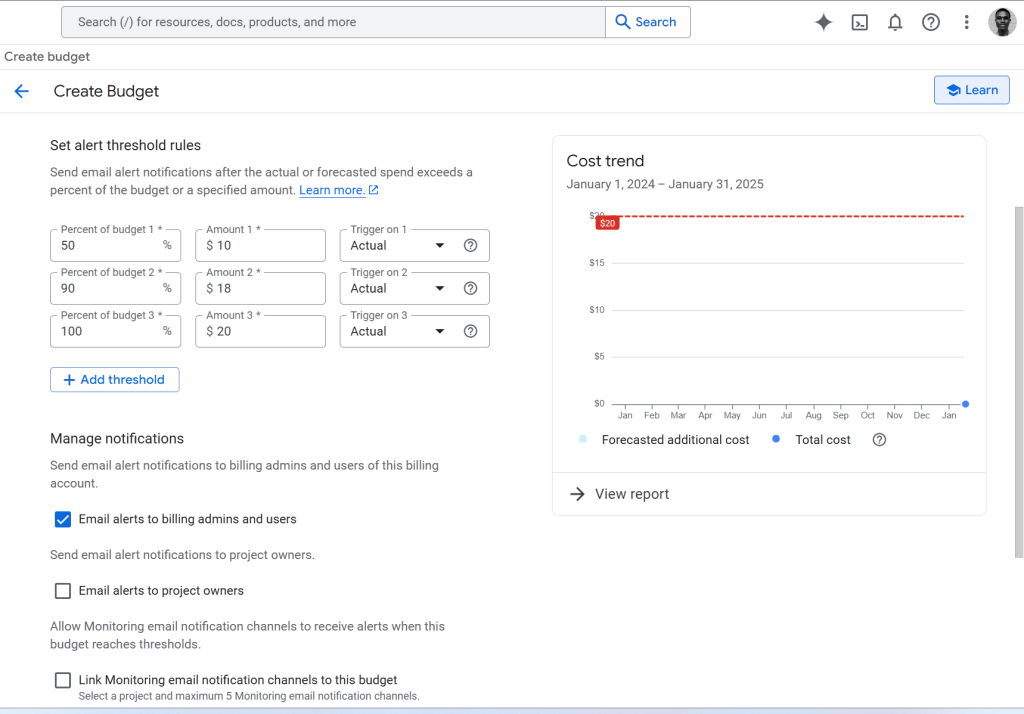

3. Establish a BigQuery Budget & Alert

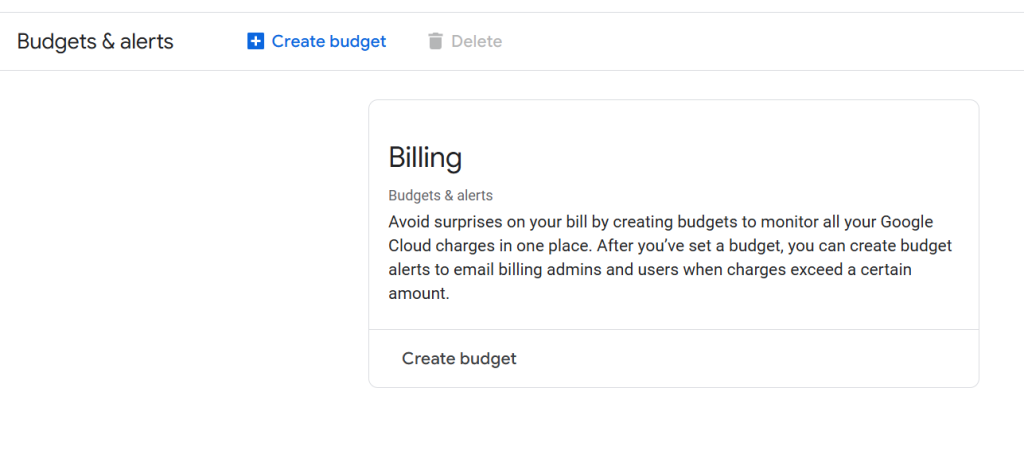

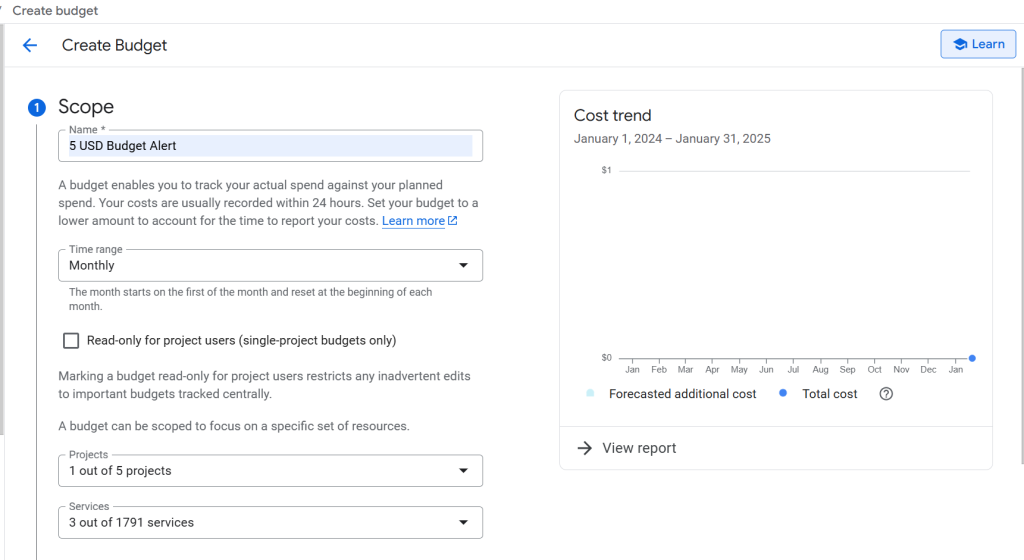

In addition to setting up billing, it’s crucial to configure a budget and alerts in Google Cloud for your BigQuery project.

The “Budgets & Alerts” feature helps you avoid unexpected charges by monitoring your usage across Google Cloud services.

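By setting a budget, you can track your charges against a planned amount and avoid surprises. After creating a budget, configure alert notifications to email you, billing administrators, or other designated users when costs reach the thresholds you choose. Keep in mind that a budget does not cap spending by itself; it only sends notifications.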

This proactive financial measure helps you manage your Google Cloud expenditures effectively.
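The alerting logic can be pictured as a set of percentage thresholds compared against current spend. This is a simplified model, not the Cloud Billing API: the 50%, 90% and 100% thresholds are an assumed starting point, and the amounts are made up.

```python
# Assumed alert thresholds, as fractions of the budget amount
DEFAULT_THRESHOLDS = (0.5, 0.9, 1.0)

def crossed_thresholds(spend: float, budget: float,
                       thresholds=DEFAULT_THRESHOLDS) -> list[float]:
    """Return the thresholds that current spend has reached or passed."""
    if budget <= 0:
        raise ValueError("budget must be positive")
    return [t for t in thresholds if spend >= t * budget]

# Hypothetical month: 46 spent against a budget of 50
print(crossed_thresholds(spend=46.0, budget=50.0))  # [0.5, 0.9]
```

Each newly crossed threshold corresponds to one notification being sent.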

4. Deregister High Cardinality Dimensions in Google Analytics

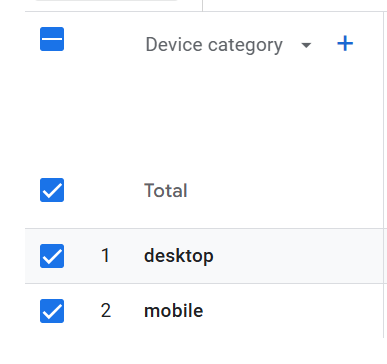

In Google Analytics (GA4), cardinality refers to the number of unique values assigned to an analytics dimension.

For example, the Device dimension might have three possible values (desktop, tablet, mobile), so the cardinality of the Device dimension is 3.

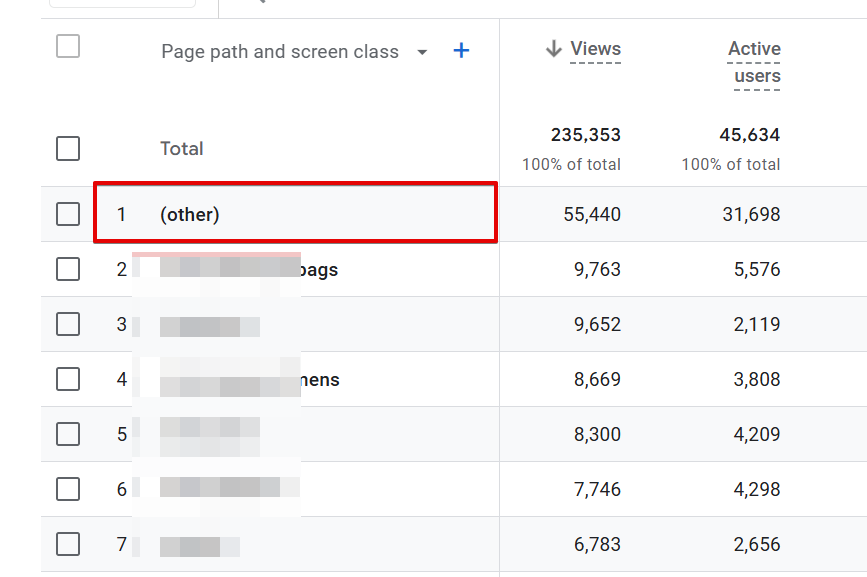

Cardinality itself is generally not an issue. Problems arise with high-cardinality dimensions: those with more than 500 unique values in a single day.

High-cardinality dimensions can impact your reports by increasing the number of rows, which can cause a report to hit its row limit. When this happens, any data beyond the limit will be condensed into the “Other” row, resulting in potential data incompleteness or misinterpretation.

Before setting up the GA4 data export to BigQuery, check whether any of the custom dimensions you use to add context to GA4 events are high-cardinality. If so, consider deregistering them: the underlying event parameters are still included in the raw event data exported to BigQuery.
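To find candidates, you can count distinct values per dimension per day. The sketch below assumes you have a sample of (day, dimension, value) rows, for example pulled from a report; the dimension names and values are illustrative.

```python
from collections import defaultdict

HIGH_CARDINALITY_LIMIT = 500  # unique values in a single day

def high_cardinality_dimensions(rows):
    """rows: iterable of (day, dimension_name, value) tuples.

    Returns {dimension_name: highest daily unique count} for every dimension
    that exceeds the limit on at least one day.
    """
    uniques = defaultdict(set)  # (day, dimension) -> distinct values seen that day
    for day, dimension, value in rows:
        uniques[(day, dimension)].add(value)
    worst = {}
    for (day, dimension), values in uniques.items():
        worst[dimension] = max(worst.get(dimension, 0), len(values))
    return {d: n for d, n in worst.items() if n > HIGH_CARDINALITY_LIMIT}

rows = [("2024-05-01", "device_category", v) for v in ("desktop", "tablet", "mobile")]
rows += [("2024-05-01", "client_id", f"id-{i}") for i in range(1200)]
print(high_cardinality_dimensions(rows))  # {'client_id': 1200}
```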

For instance, if you’re capturing the “client_id” as a custom dimension, you could deregister it in GA4. Although you won’t be able to use the dimension in GA4 reports, it will still be accessible in the BigQuery export.

An additional benefit of deregistering high-cardinality custom dimensions is that it frees up slots in the limited custom dimension quota Google allocates to each property.

5. Check for Duplicate Events

It’s worth checking for duplicate events, by which I mean events that represent the same action but are named differently (for example, “sign_up” and “signup_complete” both recording a completed registration).

This often overlooked step can prevent several issues.
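Finding events that mean the same thing usually takes a human review, but a simple normalisation pass can surface spelling variants of one name. This is a heuristic sketch with made-up event names; it will not catch semantic duplicates that use different words.

```python
import re
from collections import defaultdict

def normalise(name: str) -> str:
    """Lower-case and drop separators, so 'Sign-Up', 'signUp' and 'sign_up' collide."""
    return re.sub(r"[^a-z0-9]", "", name.lower())

def possible_duplicates(event_names):
    """Group event names that normalise to the same key; keep only real collisions."""
    groups = defaultdict(set)
    for name in event_names:
        groups[normalise(name)].add(name)
    return {key: sorted(names) for key, names in groups.items() if len(names) > 1}

print(possible_duplicates(["sign_up", "signUp", "page_view", "Sign-Up", "purchase"]))
# {'signup': ['Sign-Up', 'signUp', 'sign_up']}
```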

Why is this necessary?

Checking for duplicate events helps you avoid exceeding your daily data export limit and can reduce streaming costs, particularly for high-traffic websites with large volumes of daily visitors.

Standard GA4 properties have a BigQuery export limit of 1 million events per day for daily (batch) exports, while Analytics 360 properties have a limit in the billions of events. There is no limit on the number of events for streaming exports.

If duplicate events consistently push your property over the export limit, your daily BigQuery export will be paused, and exports for previous days won’t be reprocessed.

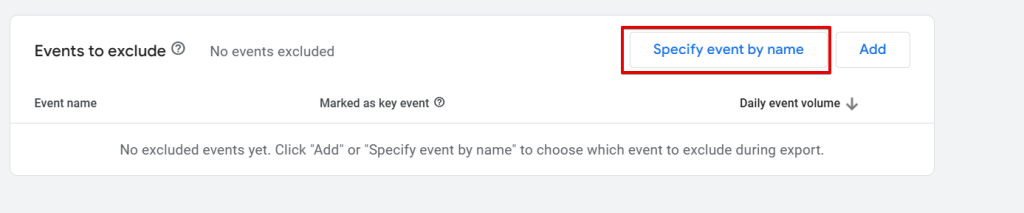

If you must keep collecting duplicate events for any reason, use the data filtering options in the GA4 BigQuery link settings to exclude them and stay within the limit.

In addition to identifying and removing duplicate events, consider removing any unnecessary GA4 events you’re tracking or filtering out specific events when exporting data to your BigQuery project.

For example, suppose you’re capturing an event after a page view solely to send the user’s client ID as a parameter to your analytics property. In that case, you can either remove the event or continue using it but filter it out during the BigQuery export configuration.
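A back-of-the-envelope check against the 1 million daily limit can tell you whether exclusions are needed before you link. In this sketch the per-event counts are invented, and the exclusion set only mimics the effect of the link’s event filter; it does not call any Google API.

```python
from typing import AbstractSet

DAILY_EXPORT_LIMIT = 1_000_000  # standard GA4 properties, daily (batch) export

def exported_total(daily_counts: dict[str, int],
                   excluded: AbstractSet[str] = frozenset()) -> int:
    """Events that would be exported in one day after removing excluded event names."""
    return sum(n for name, n in daily_counts.items() if name not in excluded)

def within_limit(daily_counts: dict[str, int],
                 excluded: AbstractSet[str] = frozenset(),
                 limit: int = DAILY_EXPORT_LIMIT) -> bool:
    return exported_total(daily_counts, excluded) <= limit

# Invented daily counts for a busy site
counts = {
    "page_view": 650_000,
    "scroll": 200_000,
    "client_id_ping": 120_000,  # hypothetical helper event that only carries the client ID
    "sign_up": 20_000,
    "signUp": 20_000,           # duplicate of sign_up under another name
}

print(exported_total(counts), within_limit(counts))                  # 1010000 False
print(within_limit(counts, excluded={"signUp"}))                      # True
print(exported_total(counts, excluded={"signUp", "client_id_ping"}))  # 870000
```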

Finally, check for events that are triggered multiple times by a single action. This isn’t just important for linking Google Analytics to BigQuery; it also impacts data quality and can skew your analysis.
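Once data is flowing, one way to look for this is to flag the same event name from the same client arriving within a very short interval. The sketch below works on (client_id, event_name, timestamp) tuples; the one-second window and the sample events are assumptions, and a legitimate quick repeat (say, two rapid add-to-cart clicks) would also be flagged, so treat matches as leads to investigate.

```python
from datetime import datetime, timedelta

def repeated_hits(events, window=timedelta(seconds=1)):
    """events: (client_id, event_name, timestamp) tuples.

    Returns the events that repeat the same name for the same client
    within `window` of the previous occurrence.
    """
    last_seen = {}
    repeats = []
    for client_id, name, ts in sorted(events, key=lambda e: e[2]):
        key = (client_id, name)
        if key in last_seen and ts - last_seen[key] <= window:
            repeats.append((client_id, name, ts))
        last_seen[key] = ts
    return repeats

t0 = datetime(2024, 5, 1, 12, 0, 0)
events = [
    ("c1", "purchase", t0),
    ("c1", "purchase", t0 + timedelta(milliseconds=200)),  # same click fired twice
    ("c2", "purchase", t0 + timedelta(milliseconds=300)),  # different client
    ("c1", "page_view", t0 + timedelta(seconds=5)),
]
print(len(repeated_hits(events)))  # 1
```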

You can read this article if you want to audit your Google Analytics (GA4) property or use the free GA4 audit tool linked here.

6. Document Your GA4 Event Implementation

Documenting the events you’re tracking in your GA4 property is crucial. While it’s not a requirement for setting up the GA4 data export to BigQuery, proper documentation is highly beneficial, especially if someone else queries the data in BigQuery or you work with other collaborators.

Having clear documentation allows analysts and team members to easily understand what each event is designed to track and the insights it is expected to provide.

This can streamline the reporting process and ensure everyone is on the same page regarding data collection.

There are various ways to document your GA4 events, but you can start with the Google Analytics event documentation worksheet we’ve created at DumbData.
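If you prefer to keep the documentation next to your code, each event can be recorded as a structured entry and exported to a spreadsheet-friendly CSV. The field names below are an assumed minimal layout, not the structure of the DumbData worksheet.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields

@dataclass
class EventSpec:
    name: str                             # GA4 event name
    action: str                           # the user action the event represents
    trigger: str                          # when and where it fires
    parameters: str = ""                  # parameters sent with the event and their meaning
    registered_as_dimension: bool = False

def to_csv(specs: list[EventSpec]) -> str:
    """Render event specs as CSV text with one header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=[f.name for f in fields(EventSpec)])
    writer.writeheader()
    for spec in specs:
        writer.writerow(asdict(spec))
    return buffer.getvalue()

specs = [
    EventSpec(
        name="sign_up",
        action="User completes account registration",
        trigger="Registration confirmation page loads",
        parameters="method: sign-up method, e.g. email",
        registered_as_dimension=True,
    ),
]
print(to_csv(specs))
```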

7. Documentation for Linking GA4 and BigQuery

In addition to documenting event implementations, it’s also helpful to have a document outlining your GA4 and BigQuery linking configuration.

This documentation can be especially valuable for agencies that manage Google Cloud Platform and GA4 for multiple clients, as it makes configuration details easy to share.

If you’re unsure what this document should look like or need help creating one from scratch, you can use our free Google Analytics 4 (GA4) x BigQuery Linking Worksheet, available at DumbData.
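A linking record can also be kept as structured data and shared as JSON. The fields below reflect settings you choose when creating the link (project, data location, data streams, export frequency, excluded events); the field names and all values are placeholders, not an official format.

```python
import json
from dataclasses import asdict, dataclass, field

@dataclass
class BigQueryLinkRecord:
    ga4_property_id: str
    gcp_project_id: str
    data_location: str              # BigQuery location chosen when linking, e.g. "EU"
    daily_export: bool = True
    streaming_export: bool = False
    data_streams: list[str] = field(default_factory=list)
    excluded_events: list[str] = field(default_factory=list)
    linked_by: str = ""             # account that created the link
    linked_on: str = ""             # ISO 8601 date

record = BigQueryLinkRecord(
    ga4_property_id="123456789",             # placeholder
    gcp_project_id="example-analytics-prj",  # placeholder
    data_location="EU",
    streaming_export=True,
    data_streams=["Web"],
    excluded_events=["client_id_ping"],      # hypothetical helper event
    linked_by="analyst@example.com",
    linked_on="2024-05-01",
)

shared = json.dumps(asdict(record), indent=2)  # text you can paste into a ticket or wiki
print(shared)
```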

8. Privacy, Privacy, Privacy

Privacy is a critical consideration, and I strongly recommend ensuring the following privacy measures are in place:

- Obtain consent for the data you collect in GA4, especially if you are subject to GDPR or similar privacy regulations.

- Implement Personally Identifiable Information (PII) redaction in your GA4 property to prevent storing sensitive personal data in your BigQuery project.

- If you’re a U.S. healthcare entity covered by HIPAA, make sure you request a Business Associate Agreement (BAA) covering the Google Cloud project that hosts your BigQuery data.
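Redaction is best done before data reaches GA4 (GA4 web data streams also offer a data redaction setting for email addresses and chosen query parameters). To illustrate the idea, the sketch below strips email addresses and named query parameters from the query string of a page URL. The parameter names and the email pattern are assumptions, paths are not touched, and a regular expression like this will not catch every form of PII.

```python
import re
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Simplified email pattern (an assumption; real-world addresses vary)
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PLACEHOLDER = "REDACTED"

def redact_url(url: str, sensitive_params: set[str]) -> str:
    """Replace listed query parameters and any email-like values with a placeholder."""
    parts = urlsplit(url)
    query = [
        (key, PLACEHOLDER if key in sensitive_params else EMAIL_PATTERN.sub(PLACEHOLDER, value))
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
    ]
    return urlunsplit(parts._replace(query=urlencode(query)))

print(redact_url(
    "https://example.com/thanks?email=jane%40example.com&plan=pro&phone=5551234",
    sensitive_params={"phone"},
))
# https://example.com/thanks?email=REDACTED&plan=pro&phone=REDACTED
```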

Having these privacy checks in place will help you stay compliant, safeguard sensitive user information, and avoid headaches later, such as having to delete data that should never have been collected.

9. Policy Conflicts

While it is uncommon to encounter linking failures between GA4 and BigQuery, one possible cause is conflicts with Google Cloud organization policies.

Policy conflicts may arise in the following situations:

- Your organization’s policy restricts the locations where resources can be created (e.g., it disallows the United States). In this case, you would need to either select an allowed data location or, if permitted, modify the policy.

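- Your organization’s policy restricts which domains’ accounts can be granted access to your project (domain-restricted sharing), which can block the Google-managed service account GA4 uses to write data to BigQuery. In this scenario, you would need to adjust the policy accordingly.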

It’s essential to be aware of these policies, especially if your organization uses GCP heavily, and to factor them into your plan when linking GA4 and BigQuery.
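Both situations can be checked on paper before you create the link. The sketch below is a hypothetical pre-flight check that treats your organization’s policies as simple allow-lists. Real organization policies are more nuanced (for example, domain-restricted sharing is configured with customer IDs rather than domain names), and the service account address is the one GA4’s documentation names for the export, so confirm it against the current docs.

```python
# Assumption: the Google-managed account GA4 uses for the export (check current docs)
GA4_EXPORT_ACCOUNT = "firebase-measurement@system.gserviceaccount.com"

def policy_issues(dataset_location: str,
                  allowed_locations: set[str],
                  allowed_member_domains: set[str],
                  export_account: str = GA4_EXPORT_ACCOUNT) -> list[str]:
    """Return human-readable problems that would likely block the link."""
    issues = []
    if dataset_location.lower() not in {loc.lower() for loc in allowed_locations}:
        issues.append(f"data location {dataset_location!r} is not allowed")
    domain = export_account.rsplit("@", 1)[-1]
    if domain not in allowed_member_domains:
        issues.append(f"accounts from {domain!r} cannot be granted access")
    return issues

# Hypothetical organization that only allows EU resources and its own domain
print(policy_issues("US", {"EU"}, {"example.com"}))
print(policy_issues("EU", {"EU"}, {"example.com", "system.gserviceaccount.com"}))  # []
```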

Conclusion

In addition to the checks above, link Google Analytics and BigQuery as early as possible. The export only includes data from the day the link is created onwards (earlier data is not backfilled), so linking early is what builds up historical analytics data in your BigQuery project.

Feel free to reach out if you need help with measurement or troubleshooting your GA4 setup. Until then, happy linking!