Detailed Guide on Bot Traffic Exclusion In Microsoft Clarity

This article covers how Microsoft Clarity manages bot traffic and how to configure your Clarity project to filter it out. It also explains how much control you have over the way Microsoft Clarity treats bot visits to your website.

Although concise, this piece aims to provide helpful knowledge or, at the very least, offer an enjoyable read.

To quickly summarise, the process of excluding bot traffic in Microsoft Clarity is easy. Often, the necessary settings are enabled by default.

Keep reading if you’d like to learn how to check whether bot traffic exclusion is set up for your Microsoft Clarity project and how to configure it yourself, and to hear my argument about “bot visits” when using tools like Microsoft Clarity.

In addition, you’ll learn why you still see visits and behavioural data on the “gtm-msr.appspot.com” domain even after configuring “bot filtering” for your Clarity project and what you can do about this.

First, however, let’s understand what bot traffic is and why it is essential to filter it from your Microsoft Clarity project.

What Exactly is Bot Traffic?

Bot traffic refers to visits to a website by automated machine programs rather than actual human users.

While the term often conjures images of malicious or spammy traffic, it’s essential to recognise that not all bots are harmful. Search engines like Google and Bing use bots to crawl and index web pages, which aids in displaying search results.

It’s crucial to distinguish between beneficial bots, which can enhance your site’s visibility and functionality, and malicious bots, which can degrade performance and security. You can ensure your website operates optimally and securely by effectively managing bot traffic.

Why Exclude Bot Visits in Microsoft Clarity & Their Impact on Your Analytics Data

I have often seen conversations in the marketing analytics community about the value of understanding bot behaviour. However, Microsoft Clarity is a digital experience analytics tool whose fundamental purpose is to help you understand how real users behave on your website and gauge the quality of their digital experiences. For a tool like that, filtering out bot traffic is crucial; if you want to study bot behaviour, a traffic logging tool is better suited to the job.

This ensures that the insights you gather are based on actual user activities, allowing you to optimise your website effectively.

It’s crucial to understand that bot visits differ significantly from human interactions on your website, and relying on such data for insights and decision-making can lead to skewed perceptions.

For instance, bots can distort how effective your traffic sources appear, potentially leading you to overvalue certain marketing channels for acquiring website visitors.

Because bot traffic consists of automated visits, it can skew metrics such as page views, scroll depth, bounce rate, time on site, and conversion rates.

For example, if a bot generates hundreds of page visits and scrolls to the bottom of each page, it could falsely inflate the reported average scroll depth. Similarly, a bot that visits multiple pages could erroneously suggest a high engagement level by showing increased pages per session.
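To make the scroll-depth and pages-per-session example concrete, here is a small worked calculation. The session figures are invented purely for illustration (they are not Clarity data), and the metric is a plain arithmetic mean, which may not match exactly how Clarity aggregates scroll depth.

```javascript
// Hypothetical sessions: max scroll depth (%) and number of pages viewed.
// These numbers are invented for illustration only, not real Clarity data.
const humanSessions = [
  { scrollDepth: 40, pages: 2 },
  { scrollDepth: 55, pages: 1 },
  { scrollDepth: 25, pages: 3 },
];

// A single bot session that loads many pages and scrolls each to the bottom.
const botSessions = [{ scrollDepth: 100, pages: 20 }];

// Plain arithmetic mean of one numeric field across sessions.
function average(sessions, field) {
  const total = sessions.reduce((sum, s) => sum + s[field], 0);
  return total / sessions.length;
}

const allSessions = humanSessions.concat(botSessions);

const humansOnly = {
  scrollDepth: average(humanSessions, "scrollDepth"),
  pagesPerSession: average(humanSessions, "pages"),
};

const withBots = {
  scrollDepth: average(allSessions, "scrollDepth"),
  pagesPerSession: average(allSessions, "pages"),
};

console.log(humansOnly); // { scrollDepth: 40, pagesPerSession: 2 }
console.log(withBots);   // { scrollDepth: 55, pagesPerSession: 6.5 }
```

One bot session out of four is enough to lift average scroll depth from 40% to 55% and more than triple pages per session in this toy example.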

These inaccuracies can mislead marketing, UX, CRO, product and engineering teams into making decisions that do not reflect how your actual users experience your website.

Consider a scenario where bots repeatedly click non-link elements on a page: this can inflate the percentage of “Rage Clicks” reported in Microsoft Clarity. Such bot interactions can obscure the impact of website changes or lead to misguided investments in features that are irrelevant to your real users and business objectives.

It’s also important to remember that bot visits can cause your “Smart Events” to trigger falsely.

How to Filter Bot Traffic in Microsoft Clarity

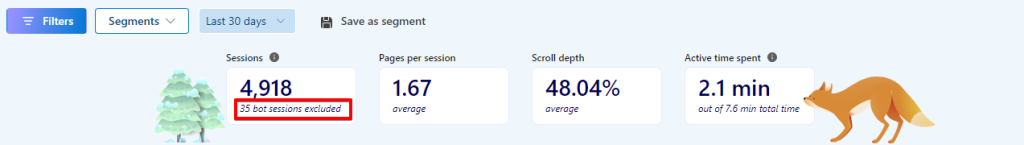

Microsoft Clarity automatically excludes bot traffic by default. To verify that this feature is active for your project, you can check the “Dashboard” interface.

Here, the aggregated “Sessions” scorecard will display a message indicating the number of bot sessions excluded from your report.

If this message is absent, double-check whether bot traffic exclusion is enabled for the project. Its absence is not conclusive, though: the message may not be displayed even when bot filtering is enabled.

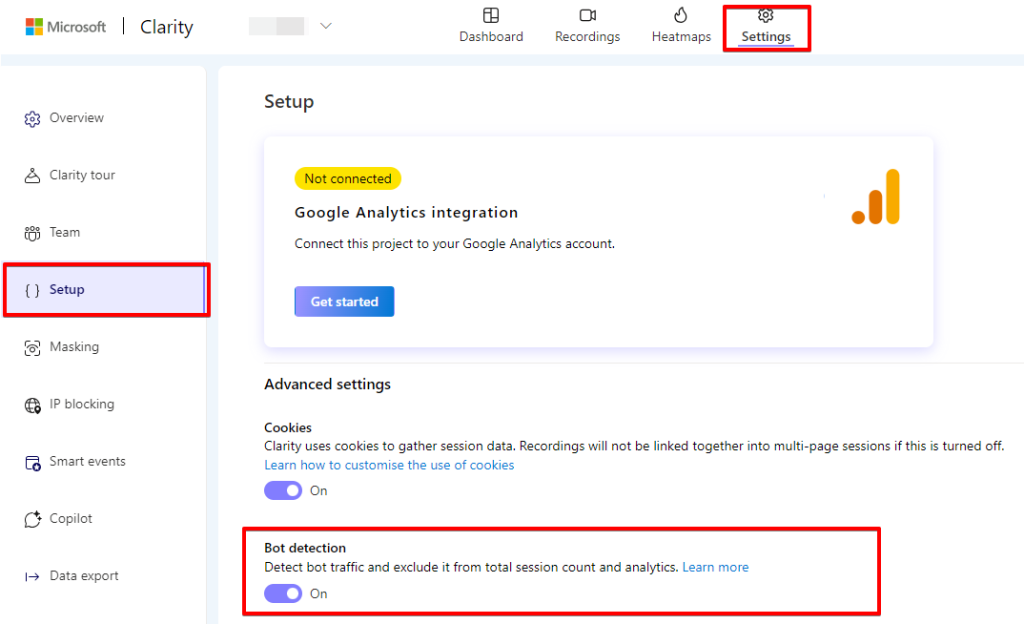

To double-check, navigate to the “Settings” interface and then to the “Setup” tab. Here, you can confirm whether the “bot detection” feature is enabled for your Clarity project.

While Clarity detects and excludes bot traffic by default, the “Bot detection” setting lets you decide what Microsoft Clarity should do with bot traffic.

Turning off bot detection isn’t advisable. You might consider it if you want a complete view of all traffic, including bots, to understand the full scope of sessions on your site, but be aware that including non-human interactions could distort your insights.

Microsoft Clarity’s bot filtering capability extends across your dashboard, heatmaps, and session recordings, ensuring that the data you see is as relevant and accurate as possible regarding human user behaviour.

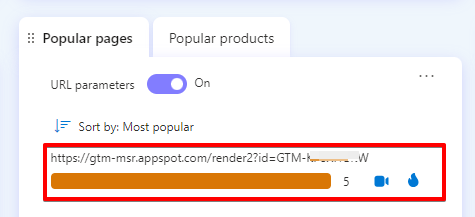

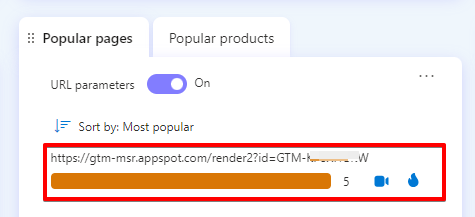

Why Do I Still See “gtm-msr.appspot.com” Traffic Data?

You might wonder why visits from “gtm-msr.appspot.com” appear in your data, even with “bot detection” activated in your Microsoft Clarity project.

This is normal. It occurs when Google Tag Manager (GTM) is installed on your website and your Microsoft Clarity project is implemented through that GTM container.

Due to the nature of the incoming data, Clarity may occasionally display bot visits from “gtm-msr.appspot.com,” which is a subdomain associated with GTM.

There are several reasons why you might observe visits from “gtm-msr.appspot.com”:

GTM Debugging: This subdomain gets used for debugging activities when you modify GTM tags and test your GTM version using GTM’s preview and debug mode.

Security Scans: Google may use this subdomain to conduct scans on your GTM tags for malware or security vulnerabilities.

Non-JavaScript Browsers: If a visitor to your site has JavaScript disabled, GTM will use an iframe from this subdomain to load certain tags.

Typically, seeing “gtm-msr.appspot.com” in your reports is not a cause for concern. It’s an expected behaviour when using GTM.

You can configure your GTM setup to stop Microsoft Clarity from collecting analytics data during these scans by using one of the following options:

Option 1:

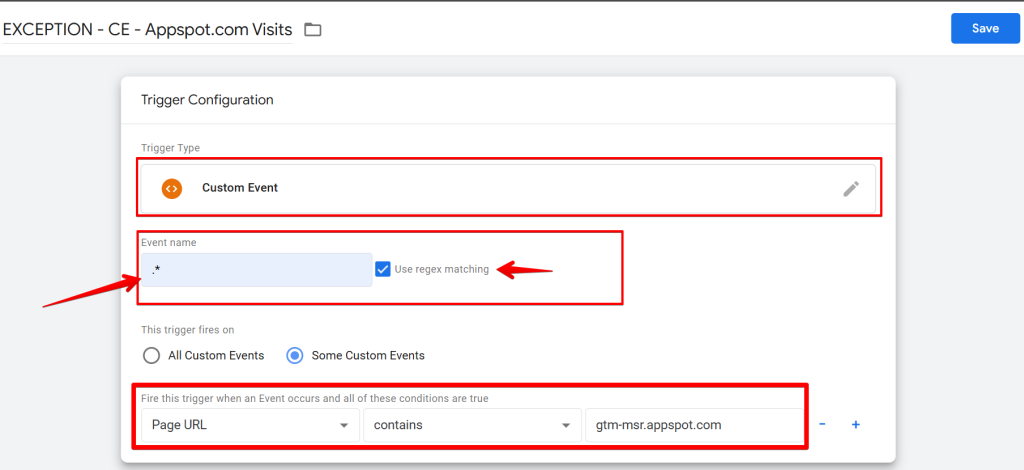

Create a simple exception trigger with the “Custom Event” trigger type in Google Tag Manager.

Enable regex matching and enter `.*` in the “Event name” field. Then set the trigger to fire on “Some Custom Events” and add a condition so that it matches only when the Page URL contains “gtm-msr.appspot.com”, as shown in the Google Tag Manager trigger configuration image below.

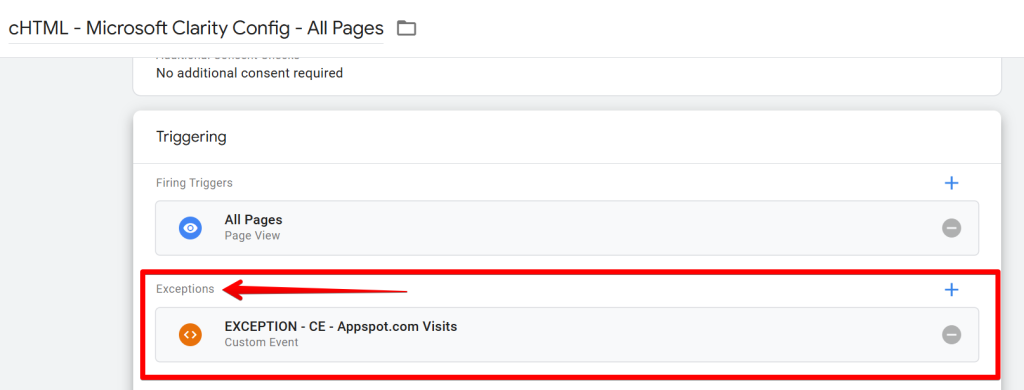

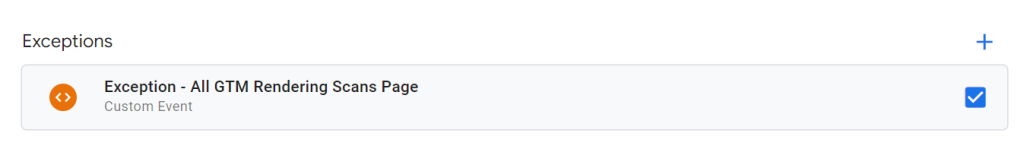
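The trigger above boils down to two checks. The sketch below mirrors them in plain JavaScript to show why `.*` is used: it matches every event name (even an empty one), so the Page URL condition is what actually decides whether the exception applies. `exceptionTriggerMatches` and the example URLs are hypothetical; GTM evaluates the real trigger internally, and this is only a model of its logic.

```javascript
// Hypothetical model of the Option 1 exception trigger's matching logic.
const EVENT_NAME_PATTERN = /.*/;          // "Event name" field with regex matching enabled
const SCAN_HOST = "gtm-msr.appspot.com";  // value used in the "Page URL contains" condition

function exceptionTriggerMatches(eventName, pageUrl) {
  // ".*" matches any event name, including the empty string,
  // so the trigger is evaluated on every dataLayer event.
  const eventMatches = EVENT_NAME_PATTERN.test(eventName);
  // Mirrors the condition: Page URL contains "gtm-msr.appspot.com".
  const urlMatches = pageUrl.indexOf(SCAN_HOST) !== -1;
  return eventMatches && urlMatches;
}

// Placeholder URLs for illustration.
console.log(exceptionTriggerMatches("gtm.js", "https://gtm-msr.appspot.com/example")); // true
console.log(exceptionTriggerMatches("gtm.js", "https://www.example.com/pricing"));     // false
```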

Attach this as an exception to all Microsoft Clarity tags, including “Custom Tags,” “Custom Identifiers,” and “API Events.”
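Attaching the exception works because in GTM an exception trigger overrides a tag’s firing triggers: a tag fires when at least one firing trigger matches and none of its exception triggers do. The sketch below models that rule for a set of Clarity-related tags. The tag names, `shouldFire`, and the event shape are hypothetical placeholders, not part of GTM’s API.

```javascript
// Minimal model of GTM's rule: fire when any firing trigger matches
// AND no exception trigger matches. All names here are hypothetical.
function shouldFire(tag, event) {
  const fires = tag.firingTriggers.some((trigger) => trigger(event));
  const blocked = tag.exceptionTriggers.some((trigger) => trigger(event));
  return fires && !blocked;
}

const allPages = () => true;
const gtmMsrException = (event) => event.pageUrl.indexOf("gtm-msr.appspot.com") !== -1;

// The same exception is attached to every Clarity-related tag.
const clarityTags = [
  "Clarity - Base",
  "Clarity - Custom Tags",
  "Clarity - Custom Identifiers",
  "Clarity - API Events",
].map((name) => ({ name, firingTriggers: [allPages], exceptionTriggers: [gtmMsrException] }));

const scanEvent = { pageUrl: "https://gtm-msr.appspot.com/example" };
const realEvent = { pageUrl: "https://www.example.com/" };

for (const tag of clarityTags) {
  console.log(tag.name, shouldFire(tag, scanEvent), shouldFire(tag, realEvent));
}
// Each line prints: <tag name> false true
```

If you forget to attach the exception to one of the tags, that tag keeps firing on scan visits, which is why the exception has to go on every Clarity tag.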

Alternatively, if you’d like a more robust solution beyond just the page URL, see Option 2.

Option 2:

This more advanced option stops your Microsoft Clarity tags from recording a visit when the page itself is on “gtm-msr.appspot.com” or when the visit’s referrer is “gtm-msr.appspot.com”. To set it up, follow the steps below:

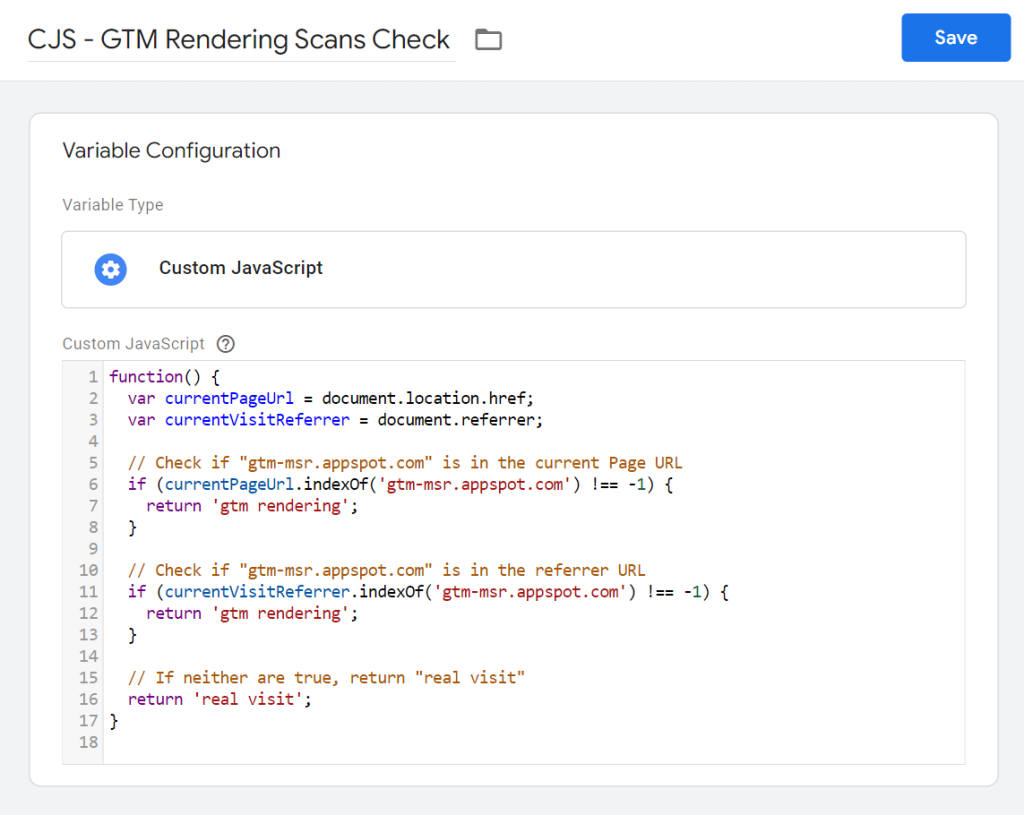

- Create a “Custom JavaScript” variable in your Google Tag Manager (GTM) container, then copy and paste the following code into the variable:

```javascript
function() {
  var currentPageUrl = document.location.href;
  var currentVisitReferrer = document.referrer;

  // Check if "gtm-msr.appspot.com" is in the current page URL
  if (currentPageUrl.indexOf('gtm-msr.appspot.com') !== -1) {
    return 'gtm rendering';
  }

  // Check if "gtm-msr.appspot.com" is in the referrer URL
  if (currentVisitReferrer.indexOf('gtm-msr.appspot.com') !== -1) {
    return 'gtm rendering';
  }

  // If neither check is true, return "real visit"
  return 'real visit';
}
```

The code first checks whether data collection is happening on the “gtm-msr.appspot.com” domain (associated with Google Tag Manager).

If so, it returns “gtm rendering”. If not, it checks whether the visit’s referrer is “gtm-msr.appspot.com”.

If that check is positive, it returns “gtm rendering” again. If both checks are false, it returns “real visit”.
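To sanity-check this logic outside GTM (for example, in Node.js or a browser console), you can pass the page URL and referrer in as arguments instead of reading them from `document`. `classifyVisit` is a hypothetical helper name; it follows the same two checks as the variable above, with a small guard that treats a missing referrer as an empty string.

```javascript
// Same two checks as the GTM Custom JavaScript variable, but with inputs
// passed in so the logic can be tested. classifyVisit is a hypothetical name.
function classifyVisit(pageUrl, referrer) {
  var SCAN_HOST = "gtm-msr.appspot.com";

  // Data collection is happening on the scan domain itself.
  if (pageUrl.indexOf(SCAN_HOST) !== -1) {
    return "gtm rendering";
  }

  // The visit arrived from the scan domain (empty referrer treated as "").
  if ((referrer || "").indexOf(SCAN_HOST) !== -1) {
    return "gtm rendering";
  }

  return "real visit";
}

console.log(classifyVisit("https://gtm-msr.appspot.com/example", ""));                  // gtm rendering
console.log(classifyVisit("https://www.example.com/", "https://gtm-msr.appspot.com/")); // gtm rendering
console.log(classifyVisit("https://www.example.com/", "https://www.google.com/"));      // real visit
```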

Note: You can modify the code to check for “appspot.com” instead. This is broader: it also matches other sites hosted on Google App Engine’s appspot.com domain.
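One design point worth considering: `indexOf` matches the domain anywhere in the URL, including a query string such as `?ref=gtm-msr.appspot.com`. A stricter alternative, sketched below under the assumption that your environment provides the standard `URL` constructor, compares hostnames instead. `isScanHost` and its `matchAllAppspot` flag are hypothetical names; setting the flag covers the broader “appspot.com” variant, which may also exclude unrelated App Engine sites.

```javascript
// Hypothetical hostname-based check. Comparing parsed hostnames avoids
// false matches when the domain only appears in a path or query string.
function isScanHost(urlString, matchAllAppspot) {
  var host;
  try {
    host = new URL(urlString).hostname;
  } catch (e) {
    return false; // empty or malformed URL, e.g. a visit with no referrer
  }
  if (matchAllAppspot) {
    // Broader variant: any appspot.com host.
    return host === "appspot.com" || host.endsWith(".appspot.com");
  }
  return host === "gtm-msr.appspot.com";
}

console.log(isScanHost("https://gtm-msr.appspot.com/example", false));              // true
console.log(isScanHost("https://www.example.com/?ref=gtm-msr.appspot.com", false)); // false
console.log(isScanHost("https://other-app.appspot.com/", true));                    // true
console.log(isScanHost("", false));                                                 // false
```

In a GTM variable you would call it with `document.location.href` and `document.referrer`, returning “gtm rendering” when either call is true.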

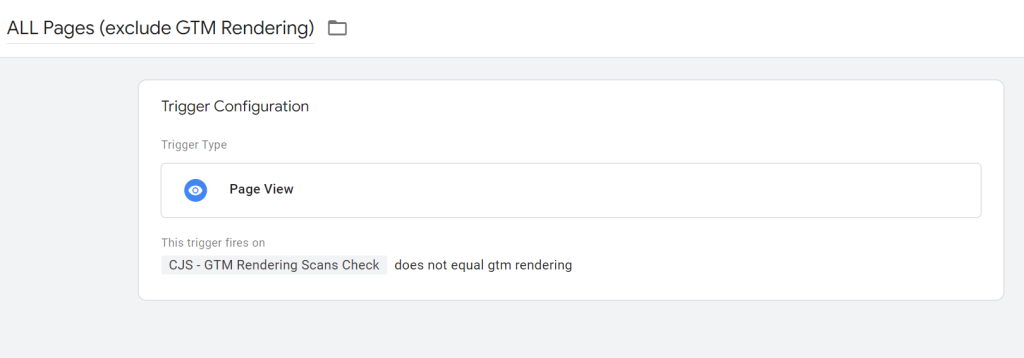

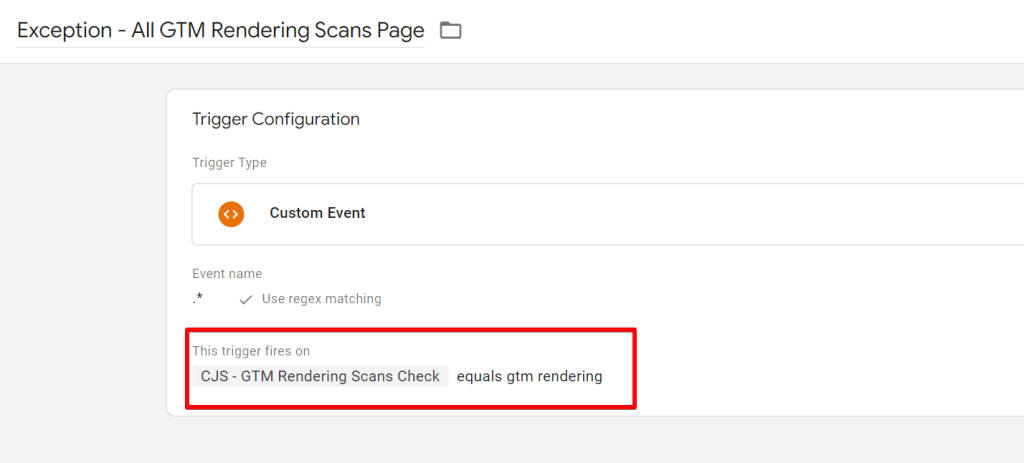

- The next step is to incorporate this condition into the trigger that fires your Microsoft Clarity tags, as shown in the image below:

Alternatively, you can create a new exception trigger and apply it across all your Microsoft Clarity tags, as well as other media tags and pixels. This approach might be more efficient:

Either method will achieve the same result.
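The equivalence can be checked by modelling each method as a predicate on the variable’s return value. The function names below are hypothetical.

```javascript
// Method A: the Clarity firing trigger has the condition "variable equals real visit".
const firesWithCondition = (visitType) => visitType === "real visit";

// Method B: a separate exception trigger matches "variable equals gtm rendering".
const firesWithException = (visitType) => !(visitType === "gtm rendering");

// The variable only ever returns one of these two strings,
// so both methods make the same decision for each of them.
for (const visitType of ["real visit", "gtm rendering"]) {
  console.log(visitType, firesWithCondition(visitType), firesWithException(visitType));
}
// real visit true true
// gtm rendering false false
```

The two would only diverge if the variable produced some other value (for instance `undefined`, if its code failed): Method A would then not fire (fail closed), while Method B would fire (fail open). Which behaviour you prefer is a judgment call for your setup.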

The Wrap

Not all bots are evil, yet their presence can certainly complicate our analysis, leading to decisions that do not accurately reflect the behaviour of our real users.

Reflecting on what I’ve covered, this article explored how Microsoft Clarity handles bot traffic by default, how to verify that bot detection is turned on for your project, the control options Microsoft Clarity offers for managing bot traffic, and how to keep “gtm-msr.appspot.com” visits out of your data.

If you’re looking for other interesting pieces about Microsoft Clarity, check out my guide on “Custom Tags” and some innovative ways of using them. If Clarity is new to you, check out this comprehensive guide to Microsoft Clarity.

With that, I’ll call it a wrap. I’d like to know what you think about Clarity’s “Bot Detection” feature; you can share your thoughts with me via LinkedIn. Until then, happy measuring.